This article introduces the basics of MapReduce, with code. It walks through writing, packaging and running the classic WordCount program on Hadoop.

1. The WordCount program

1.1 WordCount source code

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class WordCount {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Let GenericOptionsParser consume Hadoop's generic options (e.g. -D key=value)
            // and keep only the job's own arguments.
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length < 2) {
                System.err.println("Usage: wordcount <in> [<in>...] <out>");
                System.exit(2);
            }
            Job job = Job.getInstance(conf, "word count");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(TokenizerMapper.class);
            // Summing is associative and commutative, so the reducer can also serve as the combiner.
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            // Every argument except the last is an input path; the last one is the output directory.
            for (int i = 0; i < otherArgs.length - 1; ++i) {
                FileInputFormat.addInputPath(job, new Path(otherArgs[i]));
            }
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[otherArgs.length - 1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }

        // Map: split each input line into tokens and emit (word, 1) for every token.
        public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {
            private static final IntWritable one = new IntWritable(1);
            private Text word = new Text();

            @Override
            public void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                StringTokenizer itr = new StringTokenizer(value.toString());
                while (itr.hasMoreTokens()) {
                    word.set(itr.nextToken());
                    context.write(word, one);
                }
            }
        }

        // Reduce: add up all the counts received for one word.
        public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
            private IntWritable result = new IntWritable();

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }
    }
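To see the data flow without a cluster, here is a plain-Java simulation. It runs on the two sample files from section 2.1. The map step emits (word, 1) for each token. A per-file combine step pre-sums those pairs, and the reduce step sums the partial counts. This is an illustrative sketch, not Hadoop code. The class `LocalWordCountSketch` and its methods are hypothetical names. A `TreeMap` stands in for the framework's sort-by-key shuffle. It assumes Java 9 or later (`List.of`, `Map.entry`).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical, Hadoop-free simulation of WordCount's map -> combine -> reduce flow.
public class LocalWordCountSketch {

    // "Map": emit one (word, 1) pair per token, mirroring TokenizerMapper.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            pairs.add(Map.entry(itr.nextToken(), 1));
        }
        return pairs;
    }

    // Summing logic shared by combiner and reducer; TreeMap keeps keys sorted.
    static TreeMap<String, Integer> sumByKey(List<Map.Entry<String, Integer>> pairs) {
        TreeMap<String, Integer> sums = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            sums.merge(p.getKey(), p.getValue(), Integer::sum);
        }
        return sums;
    }

    // Each inner list stands for one input split (here: the lines of one file).
    static TreeMap<String, Integer> run(List<List<String>> splits) {
        List<Map.Entry<String, Integer>> shuffled = new ArrayList<>();
        for (List<String> split : splits) {
            List<Map.Entry<String, Integer>> mapped = new ArrayList<>();
            for (String line : split) {
                mapped.addAll(map(line));
            }
            // Combiner: pre-aggregate this split's pairs before they reach the reducer.
            shuffled.addAll(sumByKey(mapped).entrySet());
        }
        // Reducer: apply the same summing to the partial counts from all splits.
        return sumByKey(shuffled);
    }

    public static void main(String[] args) {
        List<List<String>> splits = List.of(
                List.of("Spark Hadoop", "Big Data"),    // wordfile1.txt
                List.of("Spark Hadoop", "Big Cloud"));  // wordfile2.txt
        run(splits).forEach((word, count) -> System.out.println(word + "\t" + count));
    }
}
```

Running `main` prints `Big 2`, `Cloud 1`, `Data 1`, `Hadoop 2` and `Spark 2`, with one tab-separated pair per line. Summing per file first and then across files gives the same totals as summing everything at once. That is why `IntSumReducer` can safely double as the combiner.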

1.2 Run the program: Run As -> Java Application

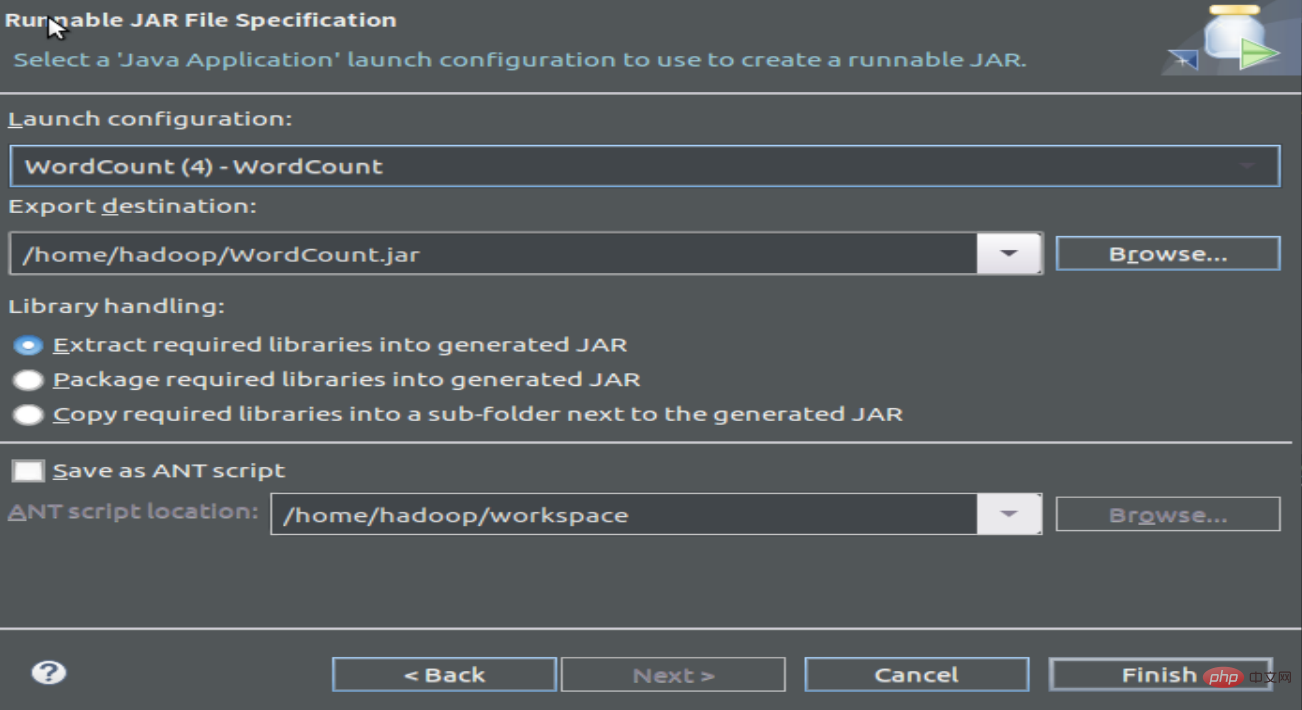

1.3 Compile and package the program into a JAR file

2. Running the program

2.1 Create the text files whose word frequencies will be counted

wordfile1.txt

Spark Hadoop

Big Data

wordfile2.txt

Spark Hadoop

Big Cloud
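You can also generate the two input files programmatically. The Java sketch below writes them. The class name `CreateSampleFiles` is hypothetical. It defaults to `/home/hadoop` because the upload commands in 2.2 read from that local directory. Pass another directory as the first argument to override it. `Files.write` ends each line with the platform line separator, so byte sizes may not match the listing in 2.3 exactly.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical helper that writes the two sample input files for WordCount.
public class CreateSampleFiles {

    static void writeSamples(Path dir) throws IOException {
        Files.createDirectories(dir);
        // Each list element becomes one line in the file.
        Files.write(dir.resolve("wordfile1.txt"), List.of("Spark Hadoop", "Big Data"), StandardCharsets.UTF_8);
        Files.write(dir.resolve("wordfile2.txt"), List.of("Spark Hadoop", "Big Cloud"), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // Assumed default: the local directory used by the "hadoop fs -put" commands.
        Path dir = Paths.get(args.length > 0 ? args[0] : "/home/hadoop");
        writeSamples(dir);
    }
}
```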

2.2 Start HDFS, create the input directory and upload the word files

cd /usr/local/hadoop/

./sbin/start-dfs.sh

./bin/hadoop fs -mkdir input

./bin/hadoop fs -put /home/hadoop/wordfile1.txt input

./bin/hadoop fs -put /home/hadoop/wordfile2.txt input

2.3 Check the uploaded files:

hadoop@dblab-VirtualBox:/usr/local/hadoop$ ./bin/hadoop fs -ls .

Found 2 items

drwxr-xr-x   - hadoop supergroup          0 2019-02-11 15:40 input

-rw-r--r--   1 hadoop supergroup          5 2019-02-10 20:22 test.txt

hadoop@dblab-VirtualBox:/usr/local/hadoop$ ./bin/hadoop fs -ls ./input

Found 2 items

-rw-r--r--   1 hadoop supergroup         27 2019-02-11 15:40 input/wordfile1.txt

-rw-r--r--   1 hadoop supergroup         29 2019-02-11 15:40 input/wordfile2.txt

2.4 Run WordCount

./bin/hadoop jar /home/hadoop/WordCount.jar input output

A large amount of log output is printed to the screen.

Then view the result:

hadoop@dblab-VirtualBox:/usr/local/hadoop$ ./bin/hadoop fs -cat output/*

Hadoop 2

Spark 2
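The keys in this output are exact tokens. `TokenizerMapper` splits lines with `java.util.StringTokenizer` using its default delimiters (space, tab, newline, carriage return and form feed). As a result, counting is case-sensitive and punctuation stays attached to words. The small demo below shows the effect; `TokenizeDemo` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringTokenizer;

// Hypothetical demo of how the mapper's tokenizer turns a line into keys.
public class TokenizeDemo {

    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        // Same constructor as in TokenizerMapper: default delimiters " \t\n\r\f".
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            out.add(itr.nextToken());
        }
        return out;
    }

    public static void main(String[] args) {
        // Case and trailing punctuation are preserved, giving three distinct keys.
        System.out.println(tokens("Hadoop hadoop\tHadoop,"));
    }
}
```

`main` prints `[Hadoop, hadoop, Hadoop,]`. WordCount would count these as three separate words.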